Understanding Alation Data Quality Architecture¶

Applies to Alation Cloud Service instances of Alation

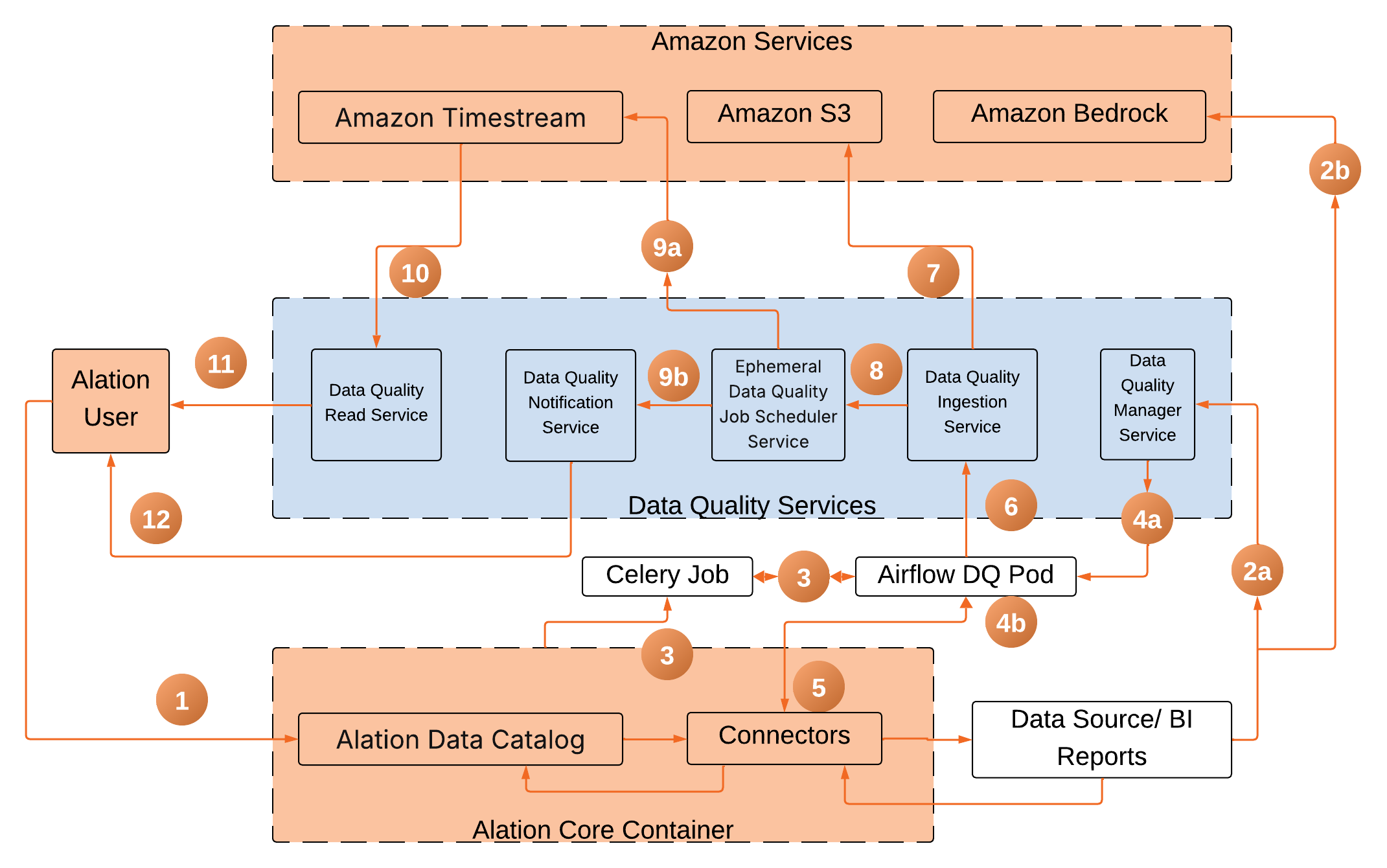

Alation Data Quality leverages a distributed microservices architecture designed specifically for Alation Cloud Service environments. The system integrates with the Alation Data Catalog and employs various specialized services to enable comprehensive data quality checking and reporting.

Alation Data Quality Monitoring Concepts¶

Understanding these fundamental concepts is essential for effective use of Alation Data Quality:

Check: A validation rule applied to specific data metrics, returning pass, fail, or error status based on defined thresholds. Examples include accuracy checks (validating numeric ranges), completeness checks (ensuring no missing values), or validity checks (format validation).

Monitor: A container grouping one or more checks tied to tables and their attributes, executing on a defined schedule.

Asset: A table and its columns from a data source or BI report actively monitored for quality metrics.

Health Score: An aggregated indicator reflecting the pass or fail ratio across all checks within a monitor or asset.
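The relationship between a check, its threshold, and the health score can be sketched in a few lines of Python. This is an illustrative model only, not Alation's implementation: the `Check` class, the metric functions, the thresholds, and the scoring formula (passed checks divided by all checks, with errors counted as not passed) are all assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Optional, Sequence


class Status(Enum):
    PASS = "pass"
    FAIL = "fail"
    ERROR = "error"


@dataclass
class Check:
    """Hypothetical model of a check: a metric function plus a threshold."""
    name: str
    metric: Callable[[Sequence[Optional[float]]], float]
    max_allowed: float  # the check passes when the metric is <= this value

    def run(self, values: Sequence[Optional[float]]) -> Status:
        try:
            measured = self.metric(values)
        except Exception:
            # The metric could not be computed (for example, an empty column).
            return Status.ERROR
        return Status.PASS if measured <= self.max_allowed else Status.FAIL


def missing_ratio(values):
    """Completeness metric: fraction of values that are missing."""
    return sum(v is None for v in values) / len(values)


def out_of_range_count(values, low=0.0, high=100.0):
    """Accuracy metric: number of non-missing values outside [low, high]."""
    return sum(1 for v in values if v is not None and not (low <= v <= high))


def health_score(statuses):
    """Assumed formula: percentage of checks that passed; None if none ran."""
    if not statuses:
        return None
    passed = sum(s is Status.PASS for s in statuses)
    return 100.0 * passed / len(statuses)


column = [12.0, None, 250.0, 47.5]
checks = [
    Check("completeness", missing_ratio, max_allowed=0.5),
    Check("accuracy", out_of_range_count, max_allowed=0),
]
statuses = [c.run(column) for c in checks]
score = health_score(statuses)
```

Here the completeness check passes (one of four values is missing, under the 0.5 threshold) and the accuracy check fails (250.0 is out of range), so the sketch reports a score of 50.0 for this pair of checks.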

System Components and Architecture¶

The Alation Data Quality architecture is composed of several key components designed to ensure robust data governance and user experience. It integrates the Alation Core Container for cataloging and user interactions, the Data Quality Manager Service for configuring monitoring, and the Airflow DQ Pod for executing data quality checks. AI-powered recommendations are provided by Amazon Bedrock, while the Data Quality Ingestion Service handles the processing and storage of check results. Amazon Timestream stores historical metrics, and the Data Quality Notification Service manages all alerts.

The architecture consists of the following key components:

Alation Core Container: Houses the Data Catalog and coordinates user interactions.

Data Quality Manager Service: Stores and manages monitor configurations.

Airflow DQ Pod: Executes data quality checks using pushdown SQL queries.

Amazon Bedrock: Provides AI-powered check recommendations via Claude 3 Sonnet.

Data Quality Ingestion Service: Processes and stores check results.

Amazon Timestream: Time-series database for historical quality metrics.

Data Quality Read Service: Fetches results from Amazon Timestream and displays them in the user interface.

Data Quality Notification Service: Manages alerts and notifications.

Data Quality Incident Service: Manages the creation and linking of incidents to external ITSM systems like Jira.

Architecture¶

Monitor Creation: Includes manual checks, optional AI-Check Recommendation (using Bedrock), and optional data profiling.

Scheduled Monitor Execution (Service Account): The core “Airflow DQ Pod” flow that runs checks using the service account and can automatically trigger notifications and incidents.

Data Quality Score Presentation: Includes reading, calculating, and presenting data quality scores.

Interactive Data Analysis (Individual User Credentials): Includes on-demand flows (failed records and root cause analysis) that occur after execution and use the individual user’s credentials.

Monitor Creation¶

The user begins in the Alation Data Catalog (Alation Core Container), which holds the metadata of the cataloged data assets, and creates a monitor (manual or SDK) in the Data Quality interface. The catalog collects this metadata through metadata extraction (MDE), lineage for BI reports, and query log ingestion (QLI) from the data source connectors.

Alation Core Container interacts with the Data Quality Manager Service:

For manual checks, the Alation Core Container sends the monitor information to the Data Quality Manager Service, which stores it in the Data Quality Manager database.

For AI-driven checks (Recommend Checks), the Alation Core Container sends the column name and column type to the Amazon Bedrock service, fetches the recommended checks, and then stores the monitor information in the Data Quality Manager database.

(Optional) During check configuration, the user can run data profiling on tables and columns.

Columns marked as sensitive or PII are flagged in profiling results.

Note

Alation Data Quality data profiling respects your organization’s data governance policies, so sensitive data handling follows established classification rules.

(Optional) After check configuration, the user can add the anomaly metric type on target tables to detect anomalies and analyze them after the monitor run.

Scheduled Monitor Execution (Service Account)¶

When a user runs a monitor, the Alation Core Container creates a Celery job and calls the Airflow DQ Pod.

The Airflow DQ Pod fetches:

The monitor definition from the Data Quality Manager.

The credentials from the data source connectors in the Alation Core Container.

The Airflow DQ Pod then creates a pushdown SQL query based on the check rules, as defined by the user or recommended by Amazon Bedrock, and passes it to the Query Service of the data source connectors for execution. The Query Service executes the data quality checks against the target data source, authenticating with the default service account configured for that data source. For a monitor to run successfully, this service account must have been granted SELECT privileges on all tables being monitored.

The Airflow DQ Pod then fetches the results of the monitor run and sends them to the Data Quality Ingestion Service.

The Data Quality Ingestion Service stores the results in Amazon S3.

The Data Quality Ingestion Service calls the Ephemeral Data Quality job scheduler service.

The Ephemeral Data Quality scheduler service:

Creates one more pod on demand to store these results by timestamp in the Amazon Timestream database.

Sends the data to the Data Quality Notification Service.

The Data Quality Incident Service creates a new ticket in the connected ITSM system (Jira) if the auto-create incident setting is enabled for failed checks and detected anomalies.
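To make the pushdown step in this flow concrete, the sketch below builds an aggregate SQL query for a completeness check and runs it inside the database, so only counts come back rather than rows. It is a minimal illustration under stated assumptions: the function names, the result dictionary, and the threshold are hypothetical, and an in-memory SQLite database stands in for the target data source and its Query Service.

```python
import sqlite3


def build_null_check_sql(table: str, column: str) -> str:
    """Build an aggregate query so that only counts leave the database.

    Identifiers are interpolated directly, so in this sketch they must come
    from trusted monitor configuration, never from free-form user input.
    """
    return (
        f'SELECT COUNT(*) AS total_rows, '
        f'SUM(CASE WHEN "{column}" IS NULL THEN 1 ELSE 0 END) AS null_rows '
        f'FROM "{table}"'
    )


def run_null_check(conn, table, column, max_null_ratio):
    """Run the check in the database and map the outcome to a status."""
    try:
        total, nulls = conn.execute(build_null_check_sql(table, column)).fetchone()
    except sqlite3.Error:
        # For example, the table is missing or SELECT is not permitted.
        return {"status": "error", "null_ratio": None}
    if not total:
        # An empty table gives no basis for a ratio.
        return {"status": "error", "null_ratio": None}
    ratio = nulls / total
    status = "pass" if ratio <= max_null_ratio else "fail"
    return {"status": status, "null_ratio": ratio}


# SQLite stands in for the monitored data source (assumption for the demo).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, customer_email TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [(1, "a@example.com"), (2, None), (3, "c@example.com"), (4, None)],
)
result = run_null_check(conn, "orders", "customer_email", max_null_ratio=0.25)
```

In this example half of the `customer_email` values are null, which exceeds the 0.25 threshold, so the check reports `fail`. A query error, such as one caused by a missing `SELECT` privilege, surfaces as an `error` status instead.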

Data Quality Score Presentation¶

Amazon Timestream then sends the results to the Data Quality Read Service.

The Data Quality Read Service sends the results to the user.

The Data Quality Notification Service sends the notifications and alerts to the user.

Interactive Data Analysis (Individual User Credential Based)¶

This flow occurs after a monitor has run and is initiated by a user reviewing the results. This model enforces user-level permissions, ensuring the user can only analyze data they are already authorized to see.

Note

Alation Data Quality does not store user credentials.

To diagnose failed checks, the user:

Links the failed check to an existing incident or creates a new incident.

Performs Failed Record Analysis:

Provides their individual user account credentials to establish a direct connection to the data source.

Retrieves sample records.

Performs Root Cause Analysis:

Provides their individual user account credentials to establish a direct connection to the data source.

Retrieves sample records; masked data is then sent to the LLM for root cause analysis.

To diagnose detected anomalies, the user:

Links the detected anomalies to an existing incident or creates a new incident.

Provides feedback to the LLM on the detected anomalies.
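Root Cause Analysis, as described in this section, sends masked data to the LLM. The sketch below shows one way such masking could work on sample records before they leave the session. The masking rule (keep the last two characters), the function names, and the list of sensitive columns are assumptions for illustration; the source does not describe the masking Alation actually applies.

```python
def mask_value(value, keep_last=2):
    """Replace all but the last `keep_last` characters with asterisks."""
    if value is None:
        return None
    text = str(value)
    if len(text) <= keep_last:
        return "*" * len(text)
    return "*" * (len(text) - keep_last) + text[-keep_last:]


def mask_records(records, sensitive_columns):
    """Return copies of the sample records with sensitive columns masked."""
    return [
        {k: mask_value(v) if k in sensitive_columns else v for k, v in r.items()}
        for r in records
    ]


# Hypothetical sample of failed records retrieved with the user's credentials.
samples = [
    {"order_id": 101, "email": "ana@example.com", "amount": -5},
    {"order_id": 102, "email": None, "amount": 30},
]
masked = mask_records(samples, sensitive_columns={"email"})
```

The original `samples` list is left unchanged, and only the masked copies would be passed on for analysis. Non-sensitive fields such as `amount` stay intact so the failing value remains visible.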

External Execution Architecture (SDK)¶

Alation Data Quality supports an Open Data Quality architecture that allows monitors to be executed outside of the Alation platform while maintaining centralized governance. You must first create an SDK-Enabled Monitor in the Alation Data Quality application to define your checks. Then, you can use the Alation Data Quality SDK on PyPI to execute those checks programmatically in your external environment.

When using SDK-Enabled monitors:

Pushdown Execution: Queries run directly in your data warehouse (for example, Snowflake or BigQuery), not inside Alation.

Zero Data Extraction: The SDK ensures no data is extracted or stored by Alation. Computation happens in your data platform, and only the results (pass or fail status, metadata) are published back to the Alation catalog.

Dynamic Configuration: The SDK automatically fetches data source credentials and check definitions from Alation at runtime, requiring zero manual configuration in your pipeline code.